Elixir side-project #2: A GraphQL API with Elixir (2/2)

The first proof of concept for my side project to manage and order recipes and ingredients via a supermarket API was successful. I continued by building a GraphQL API and a web interface with Next.js and Apollo.

This is part 2 of the blog post series Elixir side-project #2:

- Planning Recipes with a Supermarket API (1/2)

- A GraphQL API with Elixir (2/2)

GraphQL and Elixir

I assume you’ve read about GraphQL. If not, I recommend reading an introduction or watching a talk first. If you want to learn more about Elixir, see my blog post on why the Elixir language has great potential.

The most used GraphQL library for Elixir is Absinthe. The maintainers are very active on the #absinthe-graphql Slack channel, and they are writing a book about Absinthe.

Installation

I'm using Absinthe 1.4-rc, currently the latest version, along with some helper libraries. absinthe_relay still needs to be updated to 1.4, which is why I use override: true on absinthe. I put these dependencies in the mix.exs file:

    {:absinthe, "~> 1.4.0-rc", override: true},
    {:absinthe_plug, "~> 1.4.0-rc"},
    {:absinthe_phoenix, "~> 1.4.0-rc"},
    {:absinthe_relay, "~> 1.3.0"},

- absinthe_plug for integration with Plug and adding GraphiQL (setup)

- absinthe_phoenix for playing with GraphQL subscriptions (setup)

- absinthe_relay because I like to expose only global IDs. This enables automatic store updates using Apollo.

Schema Definition

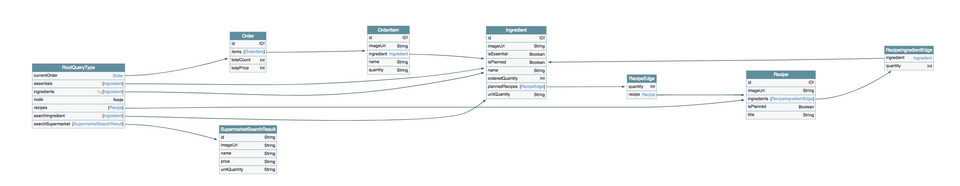

The Picape domain is small enough to define in two files: schema.ex and types.ex. The image above is a query schema overview made with GraphQL Voyager.

Absinthe provides macros to make schema definitions concise. For example the query macro can be used to define the root query fields. In the following example we define a field "recipes" using the field macro. That field resolves to a list of recipes using the resolve macro.

    query do
      @desc "Lists all recipes."
      field :recipes, list_of(:recipe) do
        resolve &Resolver.Recipe.all/3
      end
    end

Source code of the whole schema

The types file defines all object types using the object macro. In this case I used the node macro which defines a Relay node interface. This just means that this object will get an id field with a globally unique ID.
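As an aside, here is a minimal sketch in plain Elixir of what such a globally unique ID can look like. It assumes the default Absinthe Relay behaviour of Base64-encoding the type name and the internal ID joined by a colon. The module GlobalIdSketch and its functions are hypothetical stand-ins written for illustration, not part of Absinthe.

```elixir
defmodule GlobalIdSketch do
  # Hypothetical helper, not Absinthe's API: combine the type name and the internal ID.
  def encode(type_name, id), do: Base.encode64("#{type_name}:#{id}")

  # Reverse the encoding; anything that is not valid Base64 or lacks a colon is rejected.
  def decode(global_id) do
    with {:ok, decoded} <- Base.decode64(global_id),
         [type_name, id] <- String.split(decoded, ":", parts: 2) do
      {:ok, %{type: type_name, id: id}}
    else
      _ -> {:error, :invalid_global_id}
    end
  end
end

global_id = GlobalIdSketch.encode("Recipe", 1)
IO.inspect(GlobalIdSketch.decode(global_id))
```

Because the type name is part of the ID, a client cache such as Apollo's can tell a recipe with internal ID 1 apart from an ingredient with internal ID 1.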

    node object :recipe do
      field :title, :string
      field :image_url, :string

      field :is_planned, :boolean do
        resolve batched({Resolver.Order, :recipies_planned?})
      end

      field :ingredients, list_of(:recipe_ingredient_edge) do
        resolve batched({Resolver.Recipe, :ingredients_by_recipe_ids})
      end
    end

Resolvers take care of mapping the GraphQL schema to actual data. I see resolvers as glue code: the actual logic of how to fetch data should be in another layer of the application. I liked combining this with the concept of Phoenix 1.3 contexts. This way my resolvers are mostly one- or two-line calls to the relevant contexts.

    defmodule PicapeWeb.Graphql.Resolver.Recipe do
      alias Picape.Recipe

      def all(_parent, _args, _info) do
        {:ok, Recipe.list_recipes()}
      end
    end

Source code of all recipe resolvers

Phoenix 1.3 Contexts

Phoenix 1.3 introduced a concept called contexts as a way to structure and design an Elixir application.

A context groups related functionality. For example the context Recipes can include functions like list_recipes or recipes_by_ids. The function list_recipes lists all recipes and can be used in many places: in a GraphQL resolver, in a Phoenix controller or in a CLI command.

    defmodule Picape.Recipes do
      # `from` comes from Ecto.Query; aliases for Repo and the Recipe schema are omitted here.
      import Ecto.Query

      def list_recipes() do
        Repo.all(from recipe in Recipe)
      end
    end

In OOP languages I often use DDD concepts like repositories, services, entities and value objects. I still have to figure out how this relates to Phoenix contexts. If you know a good comparison, please let me know.

Batching

I knew that at some point I would have to take care of the N+1 query problem. In JavaScript and PHP I have used a DataLoader for this. Absinthe offers batching instead. For example, to fetch all ingredients for a list of recipes, the resolver for the ingredients field looks like this:

    node object :recipe do
      field :ingredients, list_of(:recipe_ingredient_edge) do
        resolve batched({Resolver.Recipe, :ingredients_by_recipe_ids})
      end
    end

batched is a helper function that I've added. It takes a tuple of the form {Module, :function_name} and passes it to Absinthe's batch function. Absinthe then calls the function named by the tuple once, with a list of the IDs of all recipes that need their ingredients. This way there is only one database query instead of one per recipe.
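The batch function itself is not shown in this post. A minimal sketch of what a function like ingredients_by_recipe_ids could look like follows, assuming the two-argument shape that Absinthe's batch middleware calls (an options argument, then the collected list) and an {:ok, map} return value keyed by recipe ID, which is what the batched helper expects. The module name BatchSketch and the in-memory @rows list are hypothetical stand-ins for the real resolver and its single database query.

```elixir
defmodule BatchSketch do
  # Hypothetical in-memory stand-in for a recipe_ingredients table.
  @rows [
    %{recipe_id: 1, name: "Flour"},
    %{recipe_id: 1, name: "Milk"},
    %{recipe_id: 2, name: "Eggs"}
  ]

  # Called once with the IDs of all recipes in the current batch.
  # The first argument is the optional extra value from the batch tuple; unused here.
  def ingredients_by_recipe_ids(_opts, recipe_ids) do
    # One lookup for the whole batch (a single database query in a real app).
    grouped =
      @rows
      |> Enum.filter(&(&1.recipe_id in recipe_ids))
      |> Enum.group_by(& &1.recipe_id)

    # Every requested ID gets an entry, so a later Map.get/2 never returns nil.
    {:ok, Map.new(recipe_ids, fn id -> {id, Map.get(grouped, id, [])} end)}
  end
end

{:ok, by_recipe} = BatchSketch.ingredients_by_recipe_ids([], [1, 2, 3])
IO.inspect(by_recipe)
```

Returning a map keyed by recipe ID lets each field resolver pick out its own result with a cheap lookup after the shared query has run.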

    defp batched(batch_fun) do
      fn parent, _args, _ctx ->
        batch(batch_fun, parent.id, fn results ->
          {:ok, batch_results} = results
          {:ok, Map.get(batch_results, parent.id)}
        end)
      end
    end

Parallel testing

I'm using PostgreSQL and Ecto, a popular database wrapper for Elixir. Ecto has a concept called Sandbox, which enables database tests to run in parallel. It works by wrapping every database connection used by a test in a transaction and rolling it back when the test finishes. All database changes are then isolated to the test itself.

Using the Ecto Sandbox for GraphQL tests turned out to be straightforward. I made an ExUnit test case, AbsintheCase, that is used by all functional tests of the GraphQL API. This test case defines some helpers and includes the DataCase with async: true, which takes care of handling database transactions per test.

I also used a Factory to make fixtures for Ecto. Fixtures are local to each test. I've seen global fixtures (e.g. database seeds) used for tests like this. The problem is that many tests then depend on the same global fixtures, which makes the fixtures hard to change and maintain.
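A test-local factory can be sketched along the lines of the pattern in Ecto's testing guide. The sketch below is an assumption about the general shape, not the project's actual factory: the Essential struct and the FactorySketch module are hypothetical, and build/2 only creates the struct in memory. A real insert!/2 would additionally pass the result to the Repo.

```elixir
defmodule Essential do
  # Hypothetical stand-in for an Ecto schema.
  defstruct [:id, :name]
end

defmodule FactorySketch do
  # Defaults for each fixture type live in one place.
  def build(:essential), do: %Essential{name: "Some essential"}

  # Per-test overrides keep the fixture data local to the test.
  # struct!/2 raises on unknown keys, so typos in attributes fail loudly.
  def build(factory_name, attributes) do
    factory_name |> build() |> struct!(attributes)
  end
end

IO.inspect(FactorySketch.build(:essential, name: "Flour"))
```

Each test states only the attributes it cares about, so changing a default does not ripple through unrelated tests.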

    test "returns a list of essentials" do
      # Arrange: Insert fixture data into the database
      insert! :essential, name: "Flour"
      insert! :essential, name: "Milk"

      # Act: Run GraphQL query
      actual = run("{ essentials { name } }")

      # Assert: Verify the response
      assert actual === {:ok, %{data: %{
        "essentials" => [
          %{ "name" => "Flour" },
          %{ "name" => "Milk" }
        ]
      }}}
    end

I'm still growing the GraphQL tests. So far, running all tests took under 0.5 seconds. I consider this very fast for functional tests.

The Future

So far I really like how GraphQL works in Elixir with Absinthe. I'm very happy with the speed of the functional tests and how concise the schema and type definitions are.

I'll play with subscriptions and the async middleware next.